Pedagogy of Artificial Intelligence: Overfitting and Complacency of Intelligence

Intro

The recent release of OpenAI’s o1, codenamed “Strawberry”, marks a significant development for Large Language Models, whose ability to pass our tests is being fine-tuned day by day, breaking previous results time and time again. So, what’s the issue? Everything’s supposedly going great. But is it? In this article, I would like to cast a little doubt on the results we have been seeing, especially when it comes to Large Language Models and the way we have come to measure them.

The way we measure LLMs today is somewhat ad hoc. I don’t believe anyone ever agreed on a set of guiding principles; rather, we looked for what was practical and, to some extent, what had the most utility from an engineering point of view. Take, for example, the Massive Multitask Language Understanding (MMLU) benchmark. The idea behind it is to measure a model’s ability to reason across multiple domains (e.g., biology, philosophy, math, physics). In practice, what it tests is a model’s ability to answer questions about a wide range of topics, and the most straightforward way to obtain a model’s MMLU score is to give it multiple-choice questions. In short, the LLM is made to sit an SAT-style exam, and we see how well it performs.
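
To make the scoring concrete, here is a minimal sketch of how a multiple-choice benchmark reduces to a single number. Everything in it is hypothetical: the four questions are invented (they are not MMLU items), and `toy_model` is a stand-in that always picks “B”. Real evaluation harnesses differ in how they prompt the model and extract its chosen letter; the point here is only that the metric compares letters and nothing else.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Question:
    prompt: str
    choices: List[str]  # options shown to the model as A, B, C, D
    answer: str         # gold label, e.g. "B"


def accuracy(model: Callable[[Question], str], questions: List[Question]) -> float:
    """Fraction of questions where the model's chosen letter equals the gold label."""
    correct = sum(1 for q in questions if model(q) == q.answer)
    return correct / len(questions)


# Hypothetical questions, invented for illustration (not taken from MMLU).
QUESTIONS = [
    Question("What is the chemical symbol for sodium?", ["So", "Na", "Sd", "S"], "B"),
    Question("How many sides does a hexagon have?", ["5", "6", "7", "8"], "B"),
    Question("Which planet is closest to the Sun?", ["Venus", "Earth", "Mercury", "Mars"], "C"),
    Question("What is 4x2?", ["6", "8", "10", "42"], "B"),
]


def toy_model(q: Question) -> str:
    # Hypothetical stand-in for an LLM: ignores the question and always answers "B".
    return "B"


print(f"accuracy = {accuracy(toy_model, QUESTIONS):.2f}")
```

On this toy set, a “model” that never reads the question still scores 0.75, a small illustration of why a multiple-choice score on its own says little about how an answer was reached.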

Similarly, other benchmarks that aim to measure a model’s ability to reason usually resemble the AI2 Reasoning Challenge (ARC). In contrast to MMLU, ARC poses grade-school science questions intended to require reasoning rather than mere recall, testing the model’s ability to ‘hold a thread of reason’. Picture a question about placing a glass bowl on a hot pan, followed by a series of slightly complex actions that should affect the subject (the glass bowl), and then a check on whether the bowl is still hot, whether it has ended up in some odd spot in the kitchen, and so forth, to see whether the model gets lost after a few changes.

And so the list of benchmarks goes on. One after the other, they promise to measure a model’s ability to perform. But this raises an important line of questions. What are we actually measuring with these benchmarks? Who is in the driver’s seat here? What is it that we are now in the process of creating?

1.) What are we actually measuring with these benchmarks?

It’s important to understand what we’re attempting to measure before we try to measure it. As I argued earlier in this essay, we have yet to arrive at a robust definition of intelligence. Starting from that point, it might seem a bit absurd to try to measure it at all. One could argue that IQ is a good measure of intelligence, and similarly the aforementioned SAT scores. Yet both of these (and many other) approaches to measuring intelligence have faced detailed and long-standing scrutiny. However, to avoid unnecessary argument, I will instead fall back on a more naive line of reasoning:

“Answering multiple choice questions correctly does not constitute intelligence.”

Now, I am not suggesting that the people who use these benchmarks would claim otherwise. Nor is anyone claiming that it’s a miracle of intelligence to remember that a glass bowl placed on a hot pan will hold on to that heat wherever it is moved afterwards (unless, say, it ends up in water). But then why do these benchmarks exist? What are they measuring? I believe the answer lies in the next question.

2.) Who is in the driver’s seat?

To better understand why these benchmarks exist, and what they actually aim to measure, it’s important to understand who uses them. In short: engineers. Naturally, there is nothing inherently wrong with engineers being in the driver’s seat; that is not one of my concerns, although I can imagine a few people who would already be convinced of my argument. But having (data) engineers drive these benchmarks comes with a few side effects. Whether they are the engineers producing and developing this technology or those building on top of it and using it, they are the ones who ultimately decide whether a model is worth using, and today we can see that reflected in the benchmarks we use.

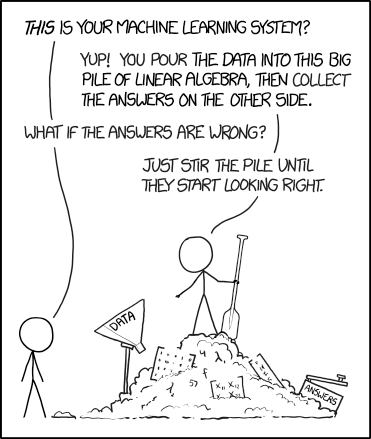

Ultimately, what we are measuring today is a model’s compliance with a specific framework. On a larger scale, that framework is the current trend in pedagogy, but as it narrows down to the level of building products, it becomes the mental landscape of the engineer using the model. In practice, MMLU, which is ostensibly meant to probe a model’s ability to recall scientific knowledge, tells the engineer only two things: whether the model returns text the way they want, and how well it can hold and process the information they have given it. This cold, pragmatic approach to LLMs, to Artificial Intelligence, and to how we measure their ‘performance’ also shapes which benchmarks get tested in the first place.

That leads me to conclude that many of the benchmarks researchers are now trying to excel at trend toward a form of complacency rather than toward intelligence. In conventional machine learning terms, we are overfitting models to specific results and ruining their overall accuracy on the very thing they were meant to imitate: intelligence. Just as overfitting in machine learning reduces generalization to new data, these benchmarks may train LLMs to excel at highly specific tasks while failing to push them toward broader capabilities such as true reasoning, creativity, or understanding.
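
The overfitting analogy can be sketched in a few lines. The example below is hypothetical: a “model” that simply memorizes every prompt/answer pair it was shown, then gets scored both on the items it saw and on a held-out set it did not. The question sets and helper names are invented for illustration; the gap between the two scores is the usual way overfitting shows up in machine learning.

```python
from typing import Dict, List, Tuple

Item = Tuple[str, str]  # (prompt, gold answer)


def train_memorizer(items: List[Item]) -> Dict[str, str]:
    # "Training" here is nothing more than storing each prompt/answer pair verbatim.
    return {prompt: answer for prompt, answer in items}


def predict(memory: Dict[str, str], prompt: str) -> str:
    # Prompts that were never seen get a fixed fallback answer.
    return memory.get(prompt, "I don't know")


def accuracy(memory: Dict[str, str], items: List[Item]) -> float:
    return sum(predict(memory, p) == a for p, a in items) / len(items)


# Hypothetical data: the "benchmark" the model was tuned on, and fresh questions.
SEEN = [("What is 4x2?", "8"), ("What is 3x3?", "9"), ("What is 5x2?", "10")]
HELD_OUT = [("What is 2x4?", "8"), ("What is 3x3?", "9"), ("What is 6x2?", "12")]

memory = train_memorizer(SEEN)
train_acc = accuracy(memory, SEEN)         # every seen item is recalled
held_out_acc = accuracy(memory, HELD_OUT)  # only the repeated "3x3" item is right
print(f"seen: {train_acc:.2f}  held-out: {held_out_acc:.2f}  gap: {train_acc - held_out_acc:.2f}")
```

Note that “What is 2x4?” fails even though its answer matches a memorized one: the memorizer has no notion that multiplication commutes, only a record of strings it has already seen.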

3.) What is it that we are now in the process of creating?

There’s no doubt we live in exciting times. I still occasionally watch models generate text and yell, “IT’S ALIVE!” But much like any parent, I have some concerns about what we have brought into this world. And on a shallower level, I’d at least like it to be creative, smart, capable and cool (everything I’m not). As we continue refining LLMs, it feels as though we’re nurturing a model that behaves more like a ‘teacher’s pet’: good at repeating the answers it has been trained on but lacking the independence to truly learn. Let’s consider this through an analogy of two students.

The first student: A student with excellent grades who keeps up with their studies, absorbs the material thoroughly, and even remembers the finer nuances their teacher provided on every topic, all of which shows in their exams.

The second student: A student with reasonable grades who does not keep up with their studies and, in fact, often sleeps in class. Yet, when given a new task (not far beyond their abilities), they can piece the puzzle together and arrive at correct answers. For example, knowing basic addition and subtraction and given a definition of multiplication, this student could work out what “4x2=?” equals.

The way we train our models today amounts to telling the model “4x2=8” a million times in the hope that it will remember it. In fact, I remember doing the same thing when I was learning the multiplication table. This approach produces real results, as in the case of the first student, and the same technique applied to training a model can yield excellent answers to even very complex mathematical questions. On the other hand, a model trained the way I imagine the ‘second student’ learns would be able to work out that “4x2=8” on its own, while still failing at a more complex formula.
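
The two students can be caricatured in code. This is a hypothetical sketch, not a description of how any real model is trained: `first_student` can only recall products from a drilled table (assumed here to cover 1 through 3), while `second_student` knows addition plus the definition “a x b means adding a to itself b times” and derives the answer. The names and the table’s range are illustrative assumptions.

```python
from typing import Dict, Optional, Tuple

# Hypothetical drilled table: the first student has memorized 1x1 through 3x3 only.
DRILLED: Dict[Tuple[int, int], int] = {
    (a, b): a * b for a in range(1, 4) for b in range(1, 4)
}


def first_student(a: int, b: int) -> Optional[int]:
    # Pure recall: returns None for any product that was never drilled.
    return DRILLED.get((a, b))


def second_student(a: int, b: int) -> int:
    # Derives the product from addition and the definition of multiplication
    # (add a to itself b times). The definition only covers whole-number b >= 0.
    if not isinstance(b, int) or b < 0:
        raise ValueError("only defined here for whole-number b >= 0")
    total = 0
    for _ in range(b):
        total += a
    return total


print(first_student(2, 3), second_student(2, 3))  # both know 2x3
print(first_student(4, 2), second_student(4, 2))  # only the second derives 4x2
```

Asked for 3 x 0.5, the second student raises an error: its rule does not stretch to a more complex formula, which mirrors the trade-off described above.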

My argument is that it is preferable to have a slightly less accurate model that can answer a much wider range of questions, and often provide out-of-the-box solutions, than a model that merely reflects what we already know. In terms of my analogy, “The Second Student’s” ability to work out multiplications creatively from prior knowledge and the rules provided is a much closer image of intelligence than “The First Student”, which corresponds to an overfitted model that cannot tell whether “Strawberry” contains two or three instances of the letter “R” without having been explicitly trained on that question. By focusing on benchmarks that reward the first student’s approach, we risk creating models that are highly accurate on narrow tasks but lack the broader capacity to think, adapt, and solve new problems. In contrast, fostering a model that mimics the second student would bring us closer to a system that isn’t just trained on answers, but one that can learn and think on its own.

In Conclusion

By introducing these benchmarks, we’ve brought about unintended side effects. Instead of edging closer to true artificial intelligence, we’ve created models that excel at repeating what they’ve been taught, not at thinking for themselves. In a sense, we’ve turned our LLMs into teacher’s pets: obedient and precise, but incapable of adapting when faced with the unexpected.

At this rate, we’re not breaking into the future of intelligence — we’re just perfecting a feedback loop that mirrors the limitations of human pedagogy. We’ve asked our models to cram, to memorize, and to repeat, only to realize that the benchmarks we designed to measure progress are simply reinforcing the same narrow outcomes we’ve seen before.

The irony, of course, is that in our efforts to create artificial intelligence, we’ve only managed to replicate the patterns of human learning that are most criticized: overfitting, rote memorization, and, ultimately, a lack of true understanding. And perhaps that’s the real question we need to ask ourselves — are we interested in building intelligence, or are we just trying to build better students for the tests we’ve created?